Over the last couple of years, I have been having fun experimenting with ways to streamline my developer experience. This likely stems from my Developer Experience (DevX) focus as a software/platform engineer in the CarGurus DevX organization. (Side note: check out this blog post I wrote on how we supercharged the experience for our Product Engineers.)

However, the challenges that I face at work and on my side projects aren’t quite the same as the ones faced by CarGurus product engineers. I started to find myself drowning in a sea of scattered commands, scripts, and tools. This, combined with my desire to find opportunities to learn more about Go patterns and libraries in a low-risk way, motivated me to invest some free time developing an “integrated development platform” - or at least the foundations for one!

Enter flow: my open-source task runner and workflow automation tool. What started as a simple itch to scratch has evolved into the foundations of a comprehensive platform for wrangling dev workflows across projects. Looking back, I realize it would have been easier to just migrate everything into a tool like Taskfile or Just, but my vision for my own personal platform doesn’t stop at the CLI. Taking that route would also have left my learning goals unmet. While much of my influence for flow comes from cloud-native projects and ideals, I’ve approached it from a local-first perspective: one where repeatable “micro-workflows” can be pieced together however you desire, making them easily discoverable, automated, and observable.

It’s ambitious, but that’s what excites me! There’s so much experimenting and learning ahead. This is my first blog post, but if this interests you, please return! I’ll be using it to document my learnings and progress on this project and some of my other side projects.

Building the Foundation

My first build of flow centered around two simple concepts driven by YAML files:

- Workspaces for organizing tasks across projects/repos

- Executables for defining those tasks

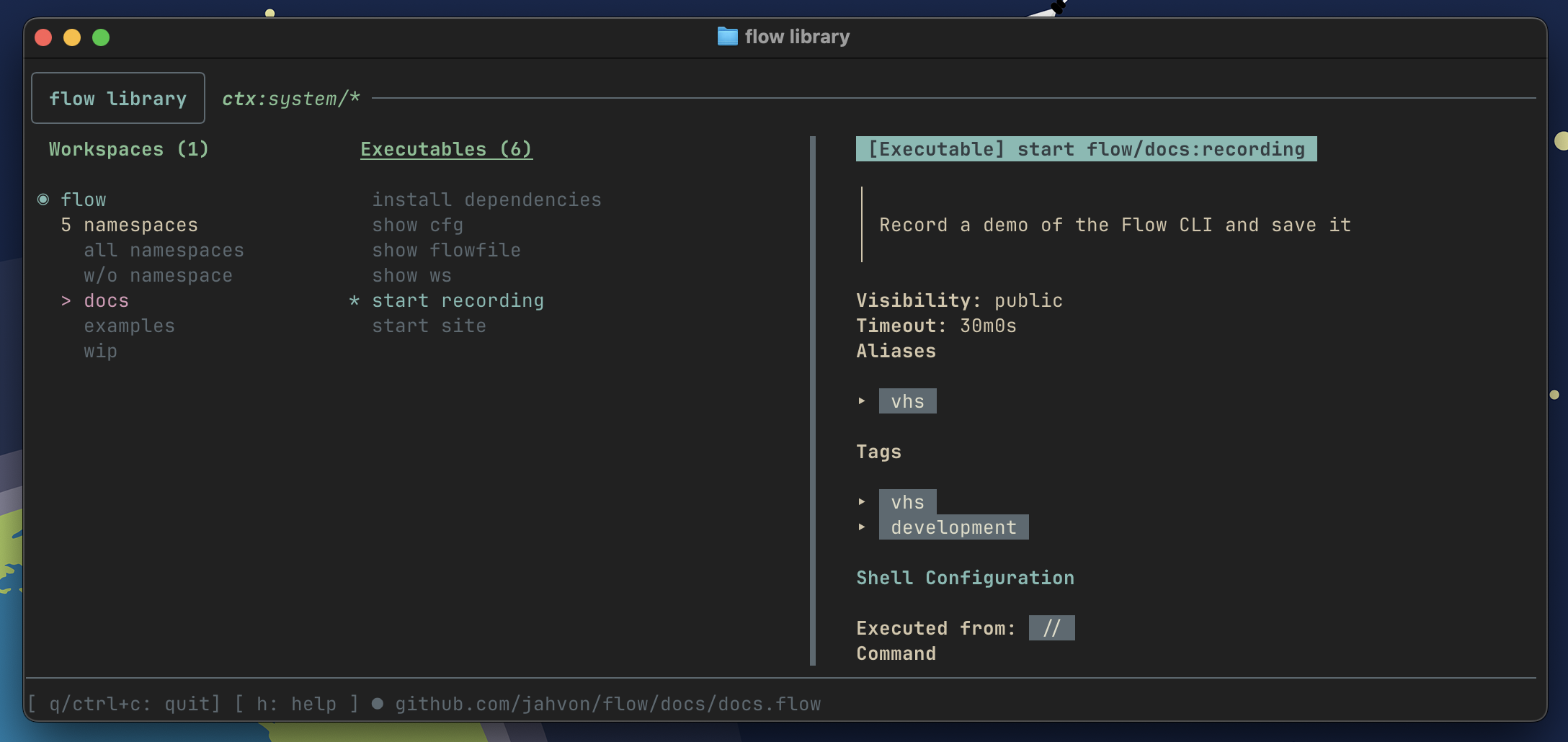

I included a simple tview terminal UI implementation to simplify the discovery of workspaces and executables across my system. While I’ve since moved away from that library, it helped me conceptualize much of the current TUI.

Through usage, I found myself iterating a lot. As I onboarded more workspaces and as those workspaces grew in complexity, flow’s feature set had to grow. My favorite components to implement have been the bubbletea TUI framework, an internal documentation generator for the flowexec.io site, the templating workflow, and the state and conditional management of serial and parallel executable types. I’ll dive deeper into those in follow-up posts, but I invite you to explore the guides at flowexec.io for a complete overview of where flow stands today.

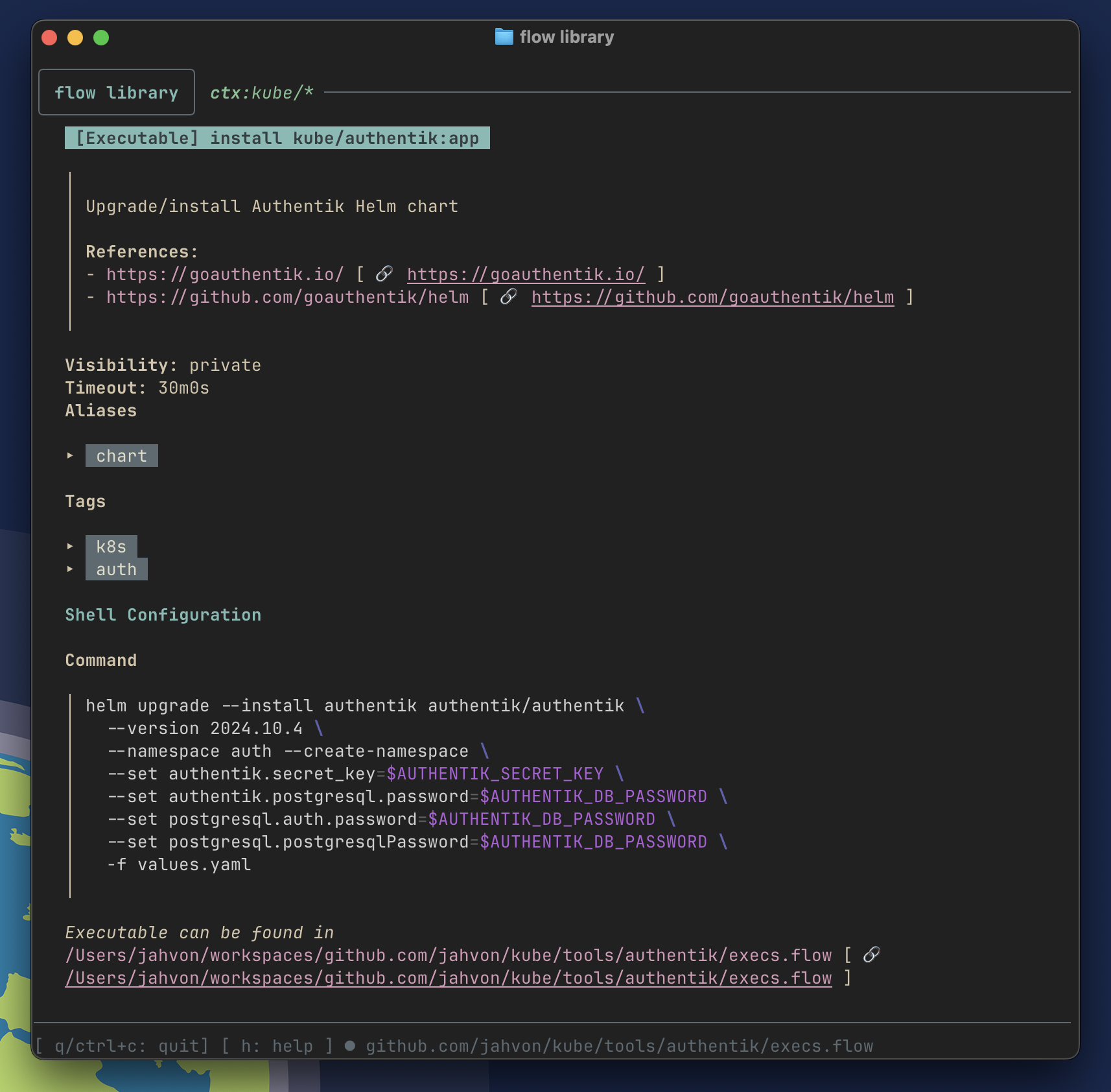

At its core, the flow CLI is a YAML-driven task runner. Here is an example of a flow file that I have for my Authentik server deployed in my home cluster; it uses the exec executable type to define the command that’s run:

    namespace: authentik # optional, additional grouping in a workspace
    tags: [k8s, auth] # useful for filtering the `flow library` command
    # this description is rendered as markdown (alongside other executable info)
    # when viewing in the `flow library`.
    description: |
      **References:**
      - https://goauthentik.io/
      - https://github.com/goauthentik/helm
    executables:
      # flow install authentik:app
      - verb: install
        name: app
        aliases: [chart]
        description: Upgrade/install Authentik Helm chart
        exec:
          params:
            # secrets are managed with the integrated vault via the `flow secret` command
            - secretRef: authentik-secret-key
              envKey: AUTHENTIK_SECRET_KEY
            - secretRef: authentik-db-password
              envKey: AUTHENTIK_DB_PASSWORD
          cmd: |
            helm upgrade --install authentik authentik/authentik \
              --version 2024.10.4 \
              --namespace auth --create-namespace \
              --set authentik.secret_key=$AUTHENTIK_SECRET_KEY \
              --set authentik.postgresql.password=$AUTHENTIK_DB_PASSWORD \
              --set postgresql.auth.password=$AUTHENTIK_DB_PASSWORD \
              --set postgresql.postgresqlPassword=$AUTHENTIK_DB_PASSWORD \
              -f values.yaml

The flow CLI provides a consistent experience for all executable runs and searches. This includes automatically generating a summary markdown document viewable with the flow library command, log formatting and archiving, and a configurable TUI experience.

The executable above shows up in the flow library as rendered markdown:

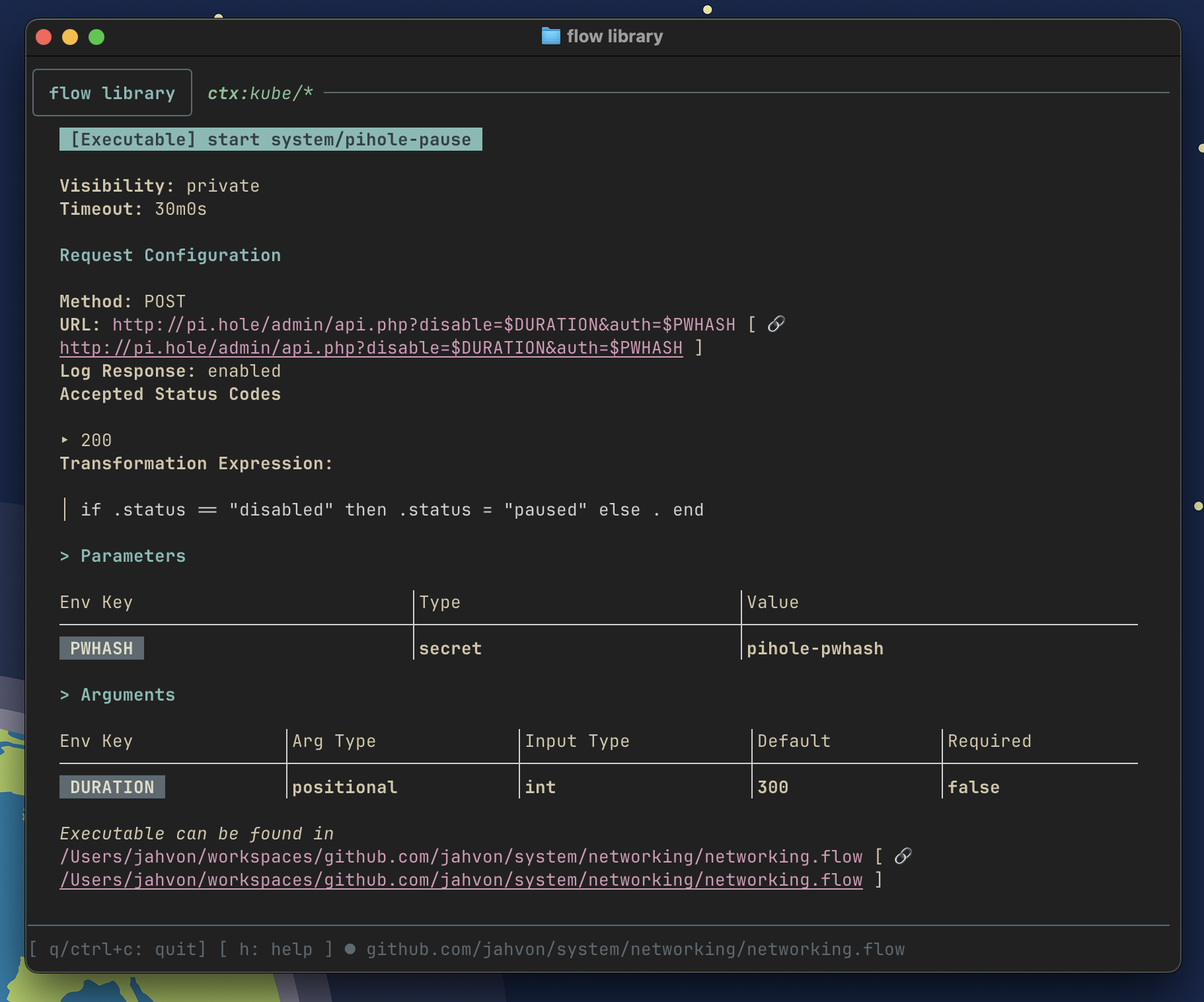

As my needs evolved, I added more executable configurations and types. Here’s an example of a common request executable I use to pause my home’s pi.hole blocking:

    executables:
      # flow pause pihole
      - verb: pause
        name: pihole
        request:
          method: "POST"
          args:
            - pos: 1
              envKey: DURATION
              default: 300
              type: int
          params:
            - secretRef: pihole-pwhash
              envKey: PWHASH
          url: http://pi.hole/admin/api.php?disable=$DURATION&auth=$PWHASH
          validStatusCodes: [200]
          logResponse: true
          transformResponse: if .status == "disabled" then .status = "paused" else . end
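To make the argument handling in that request concrete, here is a minimal Go sketch of how a positional argument with a default and a secret-backed parameter could be resolved and substituted into the URL. This is not flow's actual implementation: `resolveArg`, `expandURL`, and the placeholder secret value are hypothetical names chosen for illustration, and the substitution relies on the standard library's `os.Expand`.

```go
package main

import (
	"fmt"
	"os"
)

// resolveArg returns the positional argument at pos (1-based) if it was
// provided, otherwise the default. Hypothetical helper for illustration.
func resolveArg(args []string, pos int, def string) string {
	if pos >= 1 && pos <= len(args) && args[pos-1] != "" {
		return args[pos-1]
	}
	return def
}

// expandURL substitutes $KEY and ${KEY} references in tmpl using env.
// Keys missing from env expand to an empty string.
func expandURL(tmpl string, env map[string]string) string {
	return os.Expand(tmpl, func(key string) string { return env[key] })
}

func main() {
	const tmpl = "http://pi.hole/admin/api.php?disable=$DURATION&auth=$PWHASH"

	env := map[string]string{
		// No positional argument given, so the default of 300 applies.
		"DURATION": resolveArg(nil, 1, "300"),
		// Placeholder standing in for a value read from the vault.
		"PWHASH": "example-hash",
	}
	fmt.Println(expandURL(tmpl, env))
}
```

The sketch skips the `type: int` validation and does not URL-escape values; a real implementation would likely want both before sending the request.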

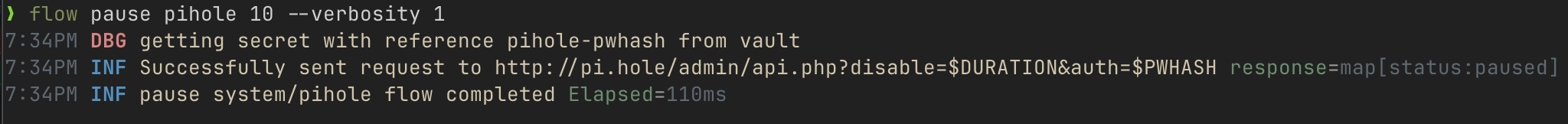

Here’s an example of the log output:

Lessons Learned

Building flow has been an incredible opportunity to deepen my understanding of Go and its ecosystem. Here are a few key lessons that have significantly shaped how I write Go now:

Small Packages and Interfaces Made Testing a Breeze

Keeping packages small and putting behavior behind interfaces makes testing easier, improves code organization, and makes refactoring as ideas evolve a joy.

Each executable type has its own Runner, making it simple to extend the system with new types. Here’s the Runner interface that each executable type implements:

    //go:generate mockgen -destination=mocks/mock_runner.go -package=mocks github.com/jahvon/flow/internal/runner Runner
    type Runner interface {
        Name() string
        Exec(ctx *context.Context, e *executable.Executable, eng engine.Engine, inputEnv map[string]string) error
        IsCompatible(executable *executable.Executable) bool
    }

This interface allows me to easily add new executable types by just implementing these three methods. The core execution logic remains clean and extensible:

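    // Exec finds the first registered runner compatible with the executable and
    // runs it, enforcing the executable's timeout when one is set.
    func Exec(
        ctx *context.Context,
        executable *executable.Executable,
        eng engine.Engine,
        inputEnv map[string]string,
    ) error {
        var assignedRunner Runner
        for _, runner := range registeredRunners {
            if runner.IsCompatible(executable) {
                assignedRunner = runner
                break
            }
        }
        if assignedRunner == nil {
            return fmt.Errorf("compatible runner not found for executable %s", executable.ID())
        }

        // No timeout configured: run synchronously.
        if executable.Timeout == 0 {
            return assignedRunner.Exec(ctx, executable, eng, inputEnv)
        }

        // Run in a goroutine so the select below can enforce the timeout. The
        // channel is buffered so the goroutine can still send its result and
        // exit after a timeout has already been returned.
        done := make(chan error, 1)
        go func() {
            done <- assignedRunner.Exec(ctx, executable, eng, inputEnv)
        }()
        select {
        case err := <-done:
            return err
        case <-time.After(executable.Timeout):
            return fmt.Errorf("timeout after %v", executable.Timeout)
        }
    }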

For testing, I use ginkgo for its expressive BDD-style syntax and GoMock to generate a mock runner. This mock simulates serial and parallel execution without the complexity of managing real subprocesses or network calls. This approach has been invaluable for verifying complex concurrent behaviors, especially when testing features like timeout handling, parallel execution limits, and failure modes in a reliable, repeatable way.

Build Better Abstractions with Service Layers

When working with third-party modules or I/O components, wrapping your interaction with a service layer is invaluable. It keeps business logic decoupled from implementation details and simplifies testing and refactoring. In flow, I use this pattern extensively for components like shell operations, file system operations, and process management.

For example, my run service abstracts away the complexities of running shell operations with the github.com/mvdan/sh library. This means if I need to change how shell commands are executed or add new shell features, I only need to update the service implementation, not the core application logic. You can explore some of my service implementations in the source code.

Good Tools Are Worth the Investment

Investing in custom tooling or incorporating open-source tools, especially for patterns like code generation, can significantly streamline your development workflow and reduce boilerplate. In flow, I define all types in YAML and use go-jsonschema for codegen.

Here’s an example of how my Launch executable type is defined:

    LaunchExecutableType:
      type: object
      required: [uri]
      description: Launches an application or opens a URI.
      properties:
        params:
          $ref: '#/definitions/ParameterList'
        args:
          $ref: '#/definitions/ArgumentList'
        app:
          type: string
          description: The application to launch the URI with.
          default: ""
        uri:
          type: string
          description: The URI to launch. This can be a file path or a web URL.
          default: ""
        wait:
          type: boolean
          description: If set to true, the executable will wait for the launched application to exit before continuing.
          default: false

This generates both the Go type and its documentation:

    // Launches an application or opens a URI.
    type LaunchExecutableType struct {
        // The application to launch the URI with.
        App string `json:"app,omitempty" yaml:"app,omitempty" mapstructure:"app,omitempty"`

        // Args corresponds to the JSON schema field "args".
        Args ArgumentList `json:"args,omitempty" yaml:"args,omitempty" mapstructure:"args,omitempty"`

        // Params corresponds to the JSON schema field "params".
        Params ParameterList `json:"params,omitempty" yaml:"params,omitempty" mapstructure:"params,omitempty"`

        // The URI to launch. This can be a file path or a web URL.
        URI string `json:"uri" yaml:"uri" mapstructure:"uri"`

        // If set to true, the executable will wait for the launched application to exit
        // before continuing.
        Wait bool `json:"wait,omitempty" yaml:"wait,omitempty" mapstructure:"wait,omitempty"`
    }

I’ve also built a docsgen tool that uses this same schema to generate structured documentation. This means my types, code, and documentation all stay in sync automatically. You can see the generated type documentation for Launch here.

This investment in tooling has paid off repeatedly, especially as flow’s type system has grown more complex. It reduces errors, ensures consistency, and lets me focus on implementing features rather than maintaining boilerplate code.

The Road Ahead

Looking forward, I’m excited to explore building extensions around the flow CLI, from allowing users to BYO-vault to providing a local browser-based UI for executing workflows and discovering what’s on your machine.

flow is a reflection of my passion for crafting tools that make developers’ lives easier. What started as a personal project has grown into something I believe can help other developers take control of their development experience. I invite you to contribute, star the repo to show your support, and open issues to report bugs or suggest features!